Level of Difficulty of Questions Staar Reading

After a brief interlude, it's time to get back to the blog series I started recently about analyzing assessments.

- In the first post, I shared the importance of digging into the questions, not just the standards they're correlated to.

- In the second post, I talked about how understanding how a test is designed can help us better understand the results we get.

- In the third post, I shared how I learned to organize assessment data by item difficulty and the implications for supporting our students.

- In this post, I'd like to talk about another way to look at assessment data to uncover areas of celebration and areas of exploration.

Let's get started!

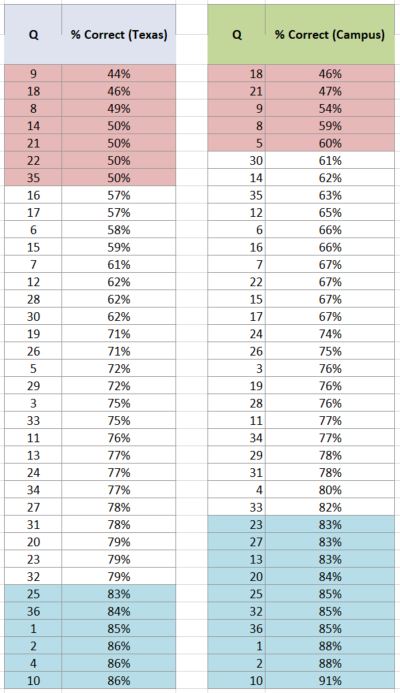

In my previous post I shared the order of questions based on item difficulty for the 2018 5th grade STAAR for the entire state of Texas. Here it is again:

According to this ordering, question 9 was the most difficult item on the test, followed by question 18, question 8, and so on down to question 10 as the least difficult item (tied with questions 2 and 4).

Here's my question: What is the likelihood that any given campus across the state would have the exact same order if they analyzed the item difficulty just for their students?

Hopefully you're like me and you're thinking, "Not very likely." Let's check to see. Here's the item difficulty of the state of Texas compared to the item difficulty at just one campus with about 80 students. What do you notice? What do you wonder?

Some of my noticings:

- Questions 8, 9, 18, and 21 were some of the most difficult items for both the state and for this particular campus.

- Question 5 was not particularly difficult for the state of Texas as a whole (it's about midway down the list), but it was surprisingly difficult for this particular campus.

- Question 22 was one of the most difficult items for the state of Texas as a whole, but it was not particularly difficult for this campus (it's about halfway down the list).

- Questions 1, 2, 10, 25, and 36 were some of the least difficult items for both the state and for this particular campus.

- Question 4 was tied with questions 2 and 10 for being the least difficult item for the state, but for this particular campus it didn't crack the top 5 list of least difficult items.

- There were more questions tied for being the most difficult items for the state and more questions tied for being the least difficult items for this particular campus.

My takeaway?

What is difficult for the state as a whole might not be difficult for the students at a particular school. Likewise, what is not very difficult for the state as a whole might have been more difficult than expected for the students at a particular school.

But is there an easier way to identify these differences than looking at an item on one list and then hunting it down on the second list? There is!

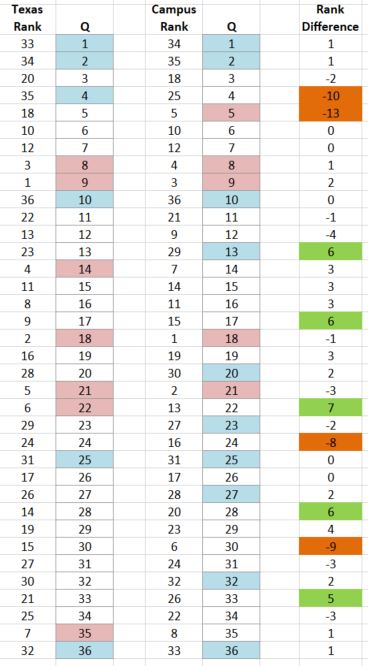

This image shows the item difficulty rank for each question for Texas and for the campus. The final column shows the difference between these rankings.

Just in case you're having trouble making sense of it, let's just look at question 9.

As you can see, this was the number 1 most difficult item for the state of Texas, but it was number 3 on the same list for this campus. As a result, the rank difference is 2 because this question was 2 questions less difficult for the campus. However, that's a pretty small difference, which I interpret to mean that this question was generally about as difficult for this campus as it was for the state as a whole. What I'm curious about and interested in finding are the notable differences.
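If you'd rather compute the rank differences than read them off a table, here's a minimal sketch in Python. It uses only the ranks quoted in this post for question 9 and for question 5 (discussed just below). The function names and the cutoff of 5 places for a "notable" difference are my own assumptions for illustration, not part of any official report.

```python
def rank_differences(state_rank, campus_rank):
    """Return campus rank minus state rank for every question in both dicts.

    Rank 1 is the most difficult item, so a positive difference means the
    question was less difficult for the campus than for the state, and a
    negative difference means it was more difficult for the campus.
    """
    return {q: campus_rank[q] - state_rank[q]
            for q in state_rank if q in campus_rank}


def notable(differences, threshold=5):
    """Keep only questions whose rank moved by at least `threshold` places.

    The threshold is a hypothetical cutoff chosen for illustration.
    """
    return {q: d for q, d in differences.items() if abs(d) >= threshold}


# Ranks quoted in this post for questions 9 and 5 (1 = most difficult).
state_rank = {9: 1, 5: 18}
campus_rank = {9: 3, 5: 5}

differences = rank_differences(state_rank, campus_rank)
print(differences)           # {9: 2, 5: -13}
print(notable(differences))  # {5: -13}
```

With a full set of ranks, sorting the notable differences would put the biggest candidates for exploration and celebration at the two ends of the list.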

Let's look at another example, question 5.

This is interesting! This question was number 18 in the item difficulty for Texas, where 1 is the most difficult and 36 is the least difficult. However, this same question was number 5 in the list of questions for the campus. The rank difference is -13 because this question was 13 questions more difficult for the campus. That's a huge difference! I call questions like this areas of exploration. These questions are worth exploring because they buck the trend. If instruction at the campus were like the rest of Texas, this question should have been just as difficult for the campus as for the rest of the state…but it wasn't. That's a big red flag that makes me want to start digging to uncover why this question was so much more difficult. There are lots of reasons this could be the case, such as:

- It includes a model the teachers never introduced their students to.

- Teacher(s) at the campus didn't know how to teach this particular concept well.

- The question included terminology the students hadn't been exposed to.

- Teacher(s) at the campus skipped this content for one reason or another, or they quickly glossed over it.

In case you're curious, here's question 5 so you can see for yourself. Since you weren't at the school that got this data, your guesses are even more hypothetical than theirs, but it is interesting to wonder.

Let me be clear. Exploring this question isn't about placing blame. It's about uncovering, learning what can be learned, and making a plan for future instruction so students at this campus hopefully don't find questions like this so difficult in the future.

Let's look at one more question from the rank order list, question 22.

This is sort of the reverse of the previous question. Question 22 was much more difficult for the state as a whole than it was for this campus. So much so that it was 7 questions less difficult for this campus than it was for the state. Whereas question 5 is an area of exploration, I consider question 22 an area of celebration! Something going on at that campus made it so that this particular question was a lot less difficult for the students there.

- Perhaps the teachers taught that unit really well and student understanding was solid.

- Perhaps the students had encountered some problems very similar to question 22.

- Perhaps students were very familiar with the context of the problem.

- Maybe the teachers were especially comfortable with the content from this question.

Again, in case you're curious, here's question 22 to get you wondering.

In Texas this is called a griddable question. Rather than being multiple choice, students have to grid their answer like this on their answer sheet:

Griddable items are usually some of the most difficult items on STAAR because of their demand for accuracy. That makes it even more interesting that this item was less difficult at this particular campus.

We can never know exactly why a question was significantly more or less difficult at a particular campus, but analyzing and comparing the rank orders of item difficulty does bring to the surface unexpected, and sometimes tantalizing, differences that are well worth exploring and celebrating.

Just this week I met with teams at a campus in my district to go over their own campus rank order data compared to our district data. They very quickly generated thoughtful hypotheses about why certain questions were more difficult and others were less so based on their memories of last year's instruction. In meeting with their 5th grade team, for example, we were surprised to find that many of the questions that were much more difficult for their students involved incorrect answers that were most likely caused by calculation errors, especially if decimals were involved. That was very eye opening and got us brainstorming ideas of what we can work on together this year.

This post wraps up my series on analyzing assessment data. I might follow up with some posts specifically about the 2018 STAAR for grades 3-5 to share my analysis of questions from those assessments. At this point, however, I've shared the big lessons I've learned about how to look at assessments in new ways, especially with regard to test design and item difficulty.

Before I go, I owe a big thank you to Dr. David Osman, Director of Research and Evaluation at Round Rock ISD, for his help and support with this work. And I also want to thank you for reading. I hope you've come away with some new ideas you can try in your own work!

This post is the third in a series where I'm sharing how I've changed the ways that I look at assessments and assessment data.

- In the first post, I shared the importance of digging into the questions, not just the standards they're correlated to.

- In the second post, I talked about how understanding how a test is designed can help us better understand the results we get.

- In this post, I'd like to share one of the ways I've learned how to analyze assessment results.

Let's get started!

Do you know what the most difficult item on an assessment is?

- Is it the one with a pictograph with a scaled interval that involves combining the values from several categories?

- Is it the multi-step story problem involving addition, subtraction, and multiplication?

- Is it the one about matching a set of disorganized data with the correct dot plot out of four possible answer choices?

Here's the thing I learned from Dr. Kevin Barlow, Executive Director of Research and Accountability in Arlington ISD: no matter how much time and effort someone spends designing an item, from crafting the wording to choosing just the right numbers, the only way to determine the difficulty of an item is to put it in front of students on an assessment. After students are finished, take a look at the results and find the question where the most students were wrong.

You found it! That's the most difficult item on the assessment.

Through their responses, our students will tell us every single time which question(s) were the most difficult for them. It's our responsibility to analyze those questions to determine what made them so challenging.

Fortunately, the Texas Education Agency provides this information to us in Statewide Item Analysis Reports. Unfortunately, it starts out looking like this:

This is a great first step, but it's not terribly useful in this format. You can't glance at it and pick out anything meaningful. However, if I copy this data into a spreadsheet and sort it, it becomes so much more useful and meaningful:

Now I've sorted the questions based on how students performed, from the item the most students answered incorrectly (#9 was the most difficult item on this test) to the item the fewest students answered incorrectly (#2, #4, and #10 were tied for being the least difficult items on this test). It's interesting to think that #9 and #10, back to back, turned out to be the most and least difficult for fifth graders across the state of Texas!
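If a spreadsheet isn't handy, the same sort can be sketched in a few lines of Python. The percent-correct values below are made-up placeholders, not figures from the Statewide Item Analysis Report. I only kept the facts mentioned in this post: #9 is the most difficult item, and #2, #4, and #10 are tied as the least difficult. The function names are mine.

```python
def difficulty_order(pct_correct):
    """Sort (question, percent correct) pairs from most to least difficult.

    The item with the lowest percent correct comes first. Ties are broken
    by question number so the order is predictable.
    """
    return sorted(pct_correct.items(), key=lambda item: (item[1], item[0]))


def tied_groups(pct_correct):
    """Group questions that share the same percent correct, hardest first."""
    groups = {}
    for question, pct in pct_correct.items():
        groups.setdefault(pct, []).append(question)
    return [sorted(groups[pct]) for pct in sorted(groups)]


# Hypothetical percent-correct values keyed by question number.
pct_correct = {2: 86, 4: 86, 8: 47, 9: 41, 10: 86, 18: 45}

order = difficulty_order(pct_correct)
print([question for question, _ in order])  # [9, 18, 8, 2, 4, 10]
print(tied_groups(pct_correct))             # [[9], [18], [8], [2, 4, 10]]
```

Grouping the ties separately makes it easy to see when several items share a spot in the ranking, which matters when you compare one list against another.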

The items highlighted in red were the most difficult items for fifth graders. Remember, it doesn't matter how the questions were designed. These items were the most difficult because the least number of students answered them correctly.

The items highlighted in blue, on the other hand, were the least difficult items for 5th graders in Texas. I'm intentional about calling them the least difficult items. We might be inclined to call them the easiest items, but that obscures the fact that these questions were still difficult enough that 14-17% of all Texas 5th graders answered them incorrectly. To put some real numbers with that, anywhere from 56,000 to 68,000 students answered these "easy" items incorrectly. These items were clearly difficult for these students, but they were the least difficult for the population of 5th graders as a whole.

Now what?

We might be inclined to go to the items in red and start analyzing those first. Great idea! But for whom?

Well, since they were the most difficult items, meaning the most students missed them, we should use these items to teach all of our students, right? Clearly everyone had issues with them!

I'm going to disagree with that.

These items were difficult even for some of our strongest students. If they struggled, then the last thing I want to do is bring this level of challenge to all of my students, especially those who struggled throughout the test. Rather, I'll analyze the most difficult items to get ideas to provide challenge to my higher performing students. These kinds of questions are clearly structured in a way that gets them thinking, challenges them, and perhaps even confuses them. That's good information to know!

(Please don't misinterpret this as me saying that I don't want to challenge all students. Rather, I want to ensure all students are appropriately challenged, and that's what I'm trying to identify through this kind of analysis. Read on to see what I mean.)

But what about students who struggled throughout the test? For those students, I'm going to analyze the least difficult items. In this case, 14-17% of students in Texas answered even these items incorrectly. These items posed a challenge for quite a number of students, and I want to analyze the items to figure out what made them challenging for these students.

Let's pretend that this is school data instead of Texas data, and let's pretend we're a team of 6th grade teachers analyzing 5th grade data for our 200 6th graders. That would mean at least 28-34 students in our 6th grade did not do well on these least difficult items when they took 5th grade STAAR last spring. That's a pretty significant number of kids! They could for sure benefit from some form of intervention based on what we learn from analyzing these items.
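The arithmetic behind those student counts is quick to check. Here's a small sketch with a helper name I made up. The statewide total of 400,000 fifth graders is not an official figure. It's my assumption, inferred from the 14-17% and 56,000-68,000 numbers quoted earlier in this post.

```python
def students_in_range(total_students, pct_low, pct_high):
    """Convert a percent range into a (low, high) count of students."""
    low = round(total_students * pct_low / 100)
    high = round(total_students * pct_high / 100)
    return low, high


# The pretend school from this post: 200 sixth graders, 14-17% incorrect.
print(students_in_range(200, 14, 17))      # (28, 34)

# Assumed statewide total of about 400,000 test takers (inferred, see above).
print(students_in_range(400_000, 14, 17))  # (56000, 68000)
```

Swapping in your own campus enrollment turns an abstract percentage into a number of actual kids, which tends to make the conversation about intervention more concrete.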

And that's where I'm going to leave this in your hands! Here is a document where I've collected the most difficult and least difficult items from the 2018 5th grade STAAR. These are the actual test questions along with the percent of students who selected each answer choice. Spend a little time analyzing them. Here are some questions to guide you:

- What are the features of each question? (How is the question constructed? What are its components and how are they put together in the question?)

- Why do you suppose the features of a given question made it more/less difficult for students?

- What mathematical knowledge and skills are required to be successful with each question?

- What non-mathematical knowledge and skills are required to be successful with each question?

- What can you learn from analyzing the distractors? What do they tell you about the kinds of mistakes students made or the misunderstandings they might have had?

- What lessons can we learn from these questions to guide us in how we support our students? (We don't want to teach our students these exact questions. That's not terribly useful since they won't be taking this exact test again. Rather, seek out general themes or trends that you find in the questions that can guide your classroom instruction and/or intervention.)

I've opened up the document so that anyone can comment. If you'd like to share your thoughts on any of the questions, please do! I look forward to reading your thoughts about the least and most difficult items on the 2018 5th grade STAAR.

I'm giving you a very small set of questions to analyze right now. You may or may not be able to generalize much from them depending on your own experiences analyzing assessment items. However, it's worth doing regardless of your experience, because now the repertoire of items you've analyzed will be that much larger.

As for myself, I've been analyzing assessment items like this for several years. What I'd like to do in my next post is share some of the lessons I've learned from this analysis across multiple years. I do feel like there are consistent trends (and a few surprises) that can inform our work in ways that simultaneously align with high-quality math instruction (because ultimately this is what I care much more about than testing) while also ensuring students are given the supports they need to succeed on mandatory high stakes tests (because they are a fact of life and it's our responsibility to ensure students, particularly those who are relying on school for this support, are prepared for them).

Every spring thousands upon thousands of Texas students take the State of Texas Assessments of Academic Readiness (STAAR for short). It's a one-day snapshot meant to evaluate a year of learning within a subject area. Even though many disagree with one-time events as assessments of learning, the fact of the matter is that they are a reality for us and our students. Because these assessments carry so much weight, we pore over the data they generate, often looking for standards where our students performed poorly so we can identify what to focus on in our instruction and intervention.

But what if I told you this well-intentioned practice may be sending us in unproductive directions? Rather than focusing on what our students really need, we may be spending time on topics and/or skills that are not the priority.

Let me illustrate what I mean with a story. I was working with a 4th grade team after a district benchmark we call STAAR Ready. Every spring in my district we give our students a released STAAR to gauge readiness for the actual STAAR coming up in May. Afterward, teams analyze the data to determine which topics to revisit and which students to put into intervention groups.

As I met with this 4th grade team, they showed me a list of the low-performing TEKS (Side note: this is what we call our standards in Texas – the Texas Essential Knowledge and Skills, TEKS for short) they had identified after analyzing the STAAR Ready data. One of the TEKS jumped out at me immediately because I was familiar with the test:

TEKS 4.4A add and subtract whole numbers and decimals to the hundredths place using the standard algorithm;

I asked them to tell me more, and the team told me they had identified students who performed poorly on the questions correlated to this standard. They created an intervention group with these students to work on adding and subtracting whole numbers and decimals to make sure they could do these computations accurately.

I followed up with a question, "Have you looked at the actual questions correlated to these TEKS?" Because they were looking at so much data and so many standards, they hadn't gotten back into the test. Instead they'd just been identifying high-priority TEKS based on student performance on the questions.

I pulled up the test and showed them this question that had immediately come to mind when they told me they were making a group focused on TEKS 4.4A:

Source: Texas Education Agency, STAAR Math, Grade 4, Item 34

Take a moment and analyze the question.

- Can you see how it involves adding and/or subtracting with whole numbers and/or decimals?

- But what other skills are involved in answering this question correctly?

- What features of the problem might have made it more difficult for the students to answer correctly?

As it turns out, this was an incredibly difficult problem for students! When it was given to students on the actual STAAR in spring 2016, only 43% of students across the state of Texas were able to answer correctly. That means 57% of Texas 4th graders, or roughly 209,390 students, couldn't find the total cost of three items in a shopping basket. That's…concerning.

In my own school district, we used the 2016 released STAAR as our STAAR Ready in spring 2017. This allowed me to collect data Texas doesn't make available to everyone. When we gave the test in spring 2017, the problem was nearly as difficult for our students. About 48% of students in my district answered it correctly. I was also able to determine this was the 6th most difficult item on the entire test of 48 questions!

What's going on? A lot actually, for such a short question. For starters, key information is spread across two sentences. The first sentence of the problem indicates the quantities of items purchased – one hat and two skirts. The second sentence indicates their prices. This is subtle, but separating that information across two sentences upped the level of difficulty significantly for 9 and 10 year olds. Students who are not reading closely can quickly jump to the conclusion that they only need to add the two prices shown without realizing that one of those prices needs to be used twice.

The second feature of this problem that ups the difficulty is the fact that it is an open response question, not multiple choice. On this kind of question, a student's answer has to be absolutely 100% accurate. If they're off by even 1 penny, the answer is marked wrong. No pressure, kids!

I was curious which feature made the problem more difficult for the students in my district, so I dove into the data. One thing I had available that Texas doesn't release is the actual answers every student submitted for this problem. I was able to analyze roughly 3,600 answers to see what students were doing. Here's what I found out.

While only 48% of students got this question correct, there was a chunk of students whose answers were in the ballpark. These are kids who likely made a small calculation error. Unfortunately, if I calculate the percent of students who got it correct or reasonably close, that just brings it up to 51% of our 4th graders. That's not terribly impressive.

So what was everybody else doing? Here's where it gets interesting. I predicted that these students just found the cost of one hat and one skirt, and it turns out that's exactly what 33% of students in my district did. About 1,200 students failed to comprehend that the total cost is composed of a hat, a skirt, and another skirt.
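If you have the raw answers students submitted, a tally like the one I described can be sketched in Python. Everything specific here is hypothetical: the prices, the sample answers, the category names, and the 100-cent window I use for "in the ballpark" are placeholders, not the values from the actual STAAR item or from my district's data. Amounts are stored in cents so the comparisons stay exact.

```python
from collections import Counter


def classify(answer_cents, hat_cents, skirt_cents, tolerance_cents=100):
    """Label one submitted answer for a 'one hat and two skirts' problem."""
    correct = hat_cents + 2 * skirt_cents
    one_of_each = hat_cents + skirt_cents
    if answer_cents == correct:
        return "correct"
    if answer_cents == one_of_each:
        return "one hat + one skirt"
    if abs(answer_cents - correct) <= tolerance_cents:
        return "close"
    return "other"


# Hypothetical prices: hat $12.50, skirt $18.25, so the total is $49.00.
HAT, SKIRT = 1250, 1825

# Hypothetical submitted answers, in cents.
answers = [4900, 3075, 4890, 3075, 4900, 2000]

tally = Counter(classify(a, HAT, SKIRT) for a in answers)
print(tally["correct"])              # 2
print(tally["one hat + one skirt"])  # 2
print(tally["close"])                # 1
print(tally["other"])                # 1
```

The check for the predicted misreading comes before the "close" check on purpose, so an answer that matches one hat plus one skirt is never counted as a small calculation slip.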

Going back to the team I was working with, I asked, "So now that we've analyzed this question, do you think the issue is that your students are struggling with adding and subtracting whole numbers and decimals?" We talked about it and they agreed that the bigger issue is how their students read and comprehend word problems.

Looking only at the standards is a very limiting view of analyzing data. There are often many different ways to assess a standard, and if we don't take the time to look at the exact questions our students interact with, we might be missing critical information. Had this team done an intervention on pure addition and subtraction of whole numbers and decimals, their kids would have gotten better at those skills for sure. But is that really what they needed?

Over the past year, I've been analyzing assessment data differently than in the past. In follow-up posts I'd like to share some of that with you. In the meantime, please dive into your assessments and analyze those questions, not just the standards. You'll hopefully come away with a truer picture of what's challenging your students so that you can more accurately target what to support them with and how.

I don't know about you, but here in Texas we've got a state math test in grades 3, 4, and 5 coming up shortly. The 5th grade test is taking place in mid-April followed by the 3rd and 4th grade tests in mid-May. In my school district, we used to stop instruction for one to two weeks prior to the test to focus on review. It's always rubbed me the wrong way, and this year we changed that. If you want to read more about our rationale for doing that, I recommend reading Playing the Long Game, a post I wrote on my district blog. I also recommend checking out my Ignite talk from NCSM 2017. The work I'm sharing here has been a chance for me to put into practice the principles I shared in that talk.

If you don't have time for all that right now and you'd rather check out the review activities I've created and get access to them for yourself, read on!

This year, with the help of our district instructional coaches, I put together collections of 15-20 minute spiral review activities that can be used daily for a month or so before the state test to review critical standards and prepare students without interrupting the momentum of regular math instruction. Here they are:

- Grade 3 activities

- Grade 4 activities

- Grade 5 activities

(Note: If you want to modify an activity, you are free to do so. Either make a copy of the file in your Google Drive or download a copy to your computer. You will have full editing rights of your copy.)

When you look at an activity, it might look short. You might ask yourself, "How could this possibly take 15-20 minutes?" Good question! These activities are designed for student discourse. Students can and should be talking regularly during these activities. The goal is for students to be noticing, wondering, questioning, analyzing, sharing, and convincing each other out loud. These discussions create opportunities to revisit concepts, clear up misconceptions, and raise awareness of the idiosyncrasies of the test questions, especially with regard to language.

Most of the activities are low or no prep, though here and there a few activities need some pages printed ahead of time. Be sure to read through an activity before facilitating it in your class so you don't catch yourself unprepared.

Each collection of activities is organized around the Texas state standards (also known as TEKS). If you don't live in Texas, you still might find these activities useful since there's so much overlap between our standards and others. To help non-Texans navigate, I've added a column that (very) briefly describes the concept associated with each activity. If you're interested in reading the actual TEKS each activity is aligned to, check out these documents:

- Grade 3 TEKS

- Grade 4 TEKS

- Grade 5 TEKS

If you try any of these activities out with your students, let me know how it goes in the comments. Enjoy!

Source: https://bstockus.wordpress.com/tag/staar/
